Overview

The dimensions of data quality are a qualitative tool for assessing the quality of data. Many publications suggest different factors to take into account, and there is general consensus on some dimensions. The DAMA framework, for example, defines six core dimensions, yet several publications list more than 60 dimensions in the literature. This shows how hard it is to reduce data quality to a set of universal rules or a magic formula. Data quality measures, while useful, must be adapted not only to the technical context but also to the specific business context: the requirements for data usage, the goals of data processing, and the risk levels involved all affect which dimensions to choose and what their acceptance criteria should be.

The six core data quality dimensions are accuracy, completeness, consistency, uniqueness, timeliness, and validity. These dimensions help to measure and improve the quality of data for different purposes and contexts. Here is a brief introduction to each dimension:

Accuracy

This dimension examines how closely the data corresponds to the real-world objects or events it represents.

Data accuracy can be measured by comparing the data values with physical measurements or observations. Another way to measure accuracy is to compare actual values with standard or reference values provided by a reliable source. Accuracy usually depends on how good the processes for gathering and validating data are.
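As a minimal sketch of the reference-value approach, the Python snippet below compares each record with a trusted reference source, assuming both are keyed by a shared identifier. The function name `accuracy_rate` and its `tolerance` parameter are illustrative and not part of Blindata.

```python
def accuracy_rate(actual, reference, tolerance=0.0):
    """Share of comparable records whose value agrees with the reference value.

    `actual` and `reference` are dicts mapping a record identifier to a value.
    Numeric values match if they differ by at most `tolerance`; other values
    must be equal.
    """
    comparable = actual.keys() & reference.keys()
    if not comparable:
        return None  # nothing to compare against

    def matches(value, expected):
        if isinstance(value, (int, float)) and isinstance(expected, (int, float)):
            return abs(value - expected) <= tolerance
        return value == expected

    hits = sum(1 for key in comparable if matches(actual[key], reference[key]))
    return hits / len(comparable)


capitals = {"IT": "Rome", "FR": "Paris", "DE": "Bonn"}
reference_capitals = {"IT": "Rome", "FR": "Paris", "DE": "Berlin"}
print(accuracy_rate(capitals, reference_capitals))  # 2 of 3 records agree
```

The tolerance matters for physical measurements, where small deviations from the reference are expected and should not count as errors.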

Completeness

This dimension measures how much of the expected data is available and not missing. It can be assessed by checking for null values, mandatory fields, or missing reference data.

Measuring completeness properly can be challenging because you have to assess the business impact of each missing piece of information. For example, a missing description for an e-commerce item has a customer-facing impact and should weigh more heavily than the absence of other pieces of information. As noted above, data quality must take into account the purpose and context of the data.
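To reflect the business impact of missing information, a completeness check can weight each expected field by how much its absence matters. The sketch below is one possible approach, not Blindata's: the field names and weights are hypothetical, and empty strings are treated as missing alongside `None`.

```python
def completeness(records, weights):
    """Weighted share of expected field values that are actually present.

    `weights` maps each expected field to its business importance.
    """
    total = 0.0
    filled = 0.0
    for record in records:
        for field, weight in weights.items():
            total += weight
            value = record.get(field)
            # Treat None and blank strings as missing
            missing = value is None or (isinstance(value, str) and not value.strip())
            if not missing:
                filled += weight
    return filled / total if total else 1.0


items = [
    {"sku": "A1", "description": "Red mug", "color": None},
    {"sku": "A2", "description": "", "color": "blue"},
]
# A missing description is customer-facing, so it weighs three times as much
weights = {"sku": 1, "description": 3, "color": 1}
print(completeness(items, weights))
```

With equal weights the same data would score 4 out of 6; weighting the description lowers the score to 0.6, because one of the two missing values is the customer-facing one.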

Consistency

This dimension measures how well the data conforms to a common standard or format across different sources or systems. It can be assessed by checking for conflicts, contradictions, or anomalies in the data.

Measuring consistency may involve checking data within the same system as well as comparing datasets across different technologies. It often also involves infrastructural concerns, such as the alignment between different systems and the overall consistency of two datasets at both row level and dataset level.
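Both perspectives can be illustrated with a small Python sketch that compares a source dataset with its copy in another system, assuming both fit in memory and share a unique key column (here the hypothetical `id`). At dataset level it reports the row-count difference; at row level it lists missing, unexpected, and mismatched keys.

```python
def compare_datasets(source, target, key):
    """Compare two lists of row dicts at dataset level and at row level."""
    # Index rows by key; assumes the key is unique within each dataset
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    return {
        # Dataset level: do the two copies have the same size?
        "row_count_diff": len(source) - len(target),
        # Row level: which keys exist on only one side?
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        # Row level: which shared keys have different content?
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


source = [{"id": 1, "amount": 10}, {"id": 2, "amount": 20}, {"id": 3, "amount": 30}]
target = [{"id": 1, "amount": 10}, {"id": 2, "amount": 25}]
print(compare_datasets(source, target, "id"))
```

Row-level comparison here treats two rows as equal only if all their columns match, so both systems must expose the same column names.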

Uniqueness

This dimension measures how well the data avoids duplication or redundancy. Uniqueness is important for data quality because it ensures that each entity or object is represented only once and consistently in the data. Duplicate records can cause confusion, errors, inefficiencies, and waste of resources.

Duplicates can arise from different sources and have different impacts on data quality. Duplicates that arise from errors in the data pipelines are usually caused by technical issues, such as incorrect joins, faulty transformations, or inconsistent identifiers. These duplicates can affect the consistency of the data and lead to misleading or erroneous analysis. For example, if a customer record is duplicated due to a faulty join in the data pipeline, it may inflate the sales figures or distort the customer segmentation.

Duplicates that arise due to the process or business model are usually caused by operational or organizational factors, such as multiple data entry points, lack of standardization, or different business rules. These duplicates can affect the uniqueness and validity of the data, and create confusion or inefficiencies in the business processes. For example, if a customer has multiple records due to different email addresses or phone numbers, they may receive duplicate marketing emails or invoices, or they may be contacted by different sales representatives.

Uniqueness can be assessed by checking for duplicate records, keys, or identifiers. A record can be a duplicate even if some of its fields differ. The rules for ensuring uniqueness and detecting duplicates usually depend on the context and the purpose of the analysis: rules defined for one business may not apply to another. For example, two customer records with the same name and address but different email addresses or phone numbers could be treated as duplicates. This may seem a good deduplication rule, but it may have compliance implications depending on how and why the data is used.
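A rule-based duplicate check can be sketched by grouping records on a normalized key built from the fields the business considers identifying. The field names and the normalization below are assumptions for illustration; which fields make up the key remains a business decision.

```python
from collections import defaultdict


def normalize(text):
    # Case-fold and collapse whitespace so formatting differences do not hide duplicates
    return " ".join(text.casefold().split())


def find_duplicates(records, key_fields):
    """Return groups of record ids that share the same normalized key."""
    groups = defaultdict(list)
    for record in records:
        key = tuple(normalize(record[field]) for field in key_fields)
        groups[key].append(record["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


customers = [
    {"id": 1, "name": "Mario Rossi", "address": "Via Roma 1", "email": "m.rossi@example.com"},
    {"id": 2, "name": "mario  rossi", "address": "via roma 1", "email": "mario@example.org"},
    {"id": 3, "name": "Anna Bianchi", "address": "Via Po 5", "email": "anna@example.com"},
]
print(find_duplicates(customers, ["name", "address"]))  # [[1, 2]]
print(find_duplicates(customers, ["email"]))            # []
```

The same data yields different results under different rules, which is why the deduplication key should be agreed with the business before it is automated.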

Timeliness

This dimension measures how current and up-to-date the data is. It can be assessed by checking for timestamps, expiration dates, or frequency of updates.

Timeliness and freshness are two related but distinct data quality dimensions. Timeliness measures whether the data is available and up to date when the processes that use it need it, while freshness measures how current the data is with respect to the present moment. Both depend on the frequency and speed of data updates, but also on the expectations and needs of the data users.

For example, suppose you have a dataset that contains the daily sales of a product. If you use this data to create a monthly report, timeliness matters more than freshness: the data must be available and accurate by the end of each month, but it does not matter if it is a few days old. If instead you use the data to monitor inventory levels and adjust production accordingly, freshness matters more than timeliness: the data must reflect the current situation as closely as possible, so even a delay of a few hours can be significant.
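The distinction can be expressed in a few lines of Python: freshness as the age of the latest data at the present moment, timeliness as whether the data was available by the deadline of the process that uses it. The function names, dates, and the one-hour freshness limit are hypothetical values chosen to mirror the sales example.

```python
from datetime import datetime, timedelta, timezone


def freshness(last_update: datetime, now: datetime) -> timedelta:
    """Age of the most recent data relative to the present moment."""
    return now - last_update


def is_timely(available_at: datetime, deadline: datetime) -> bool:
    """True if the data was available by the time the consuming process needed it."""
    return available_at <= deadline


utc = timezone.utc
last_sale_loaded = datetime(2024, 5, 31, 18, 0, tzinfo=utc)
now = datetime(2024, 6, 3, 9, 0, tzinfo=utc)

# Monthly report: only the reporting deadline matters
print(is_timely(last_sale_loaded, deadline=datetime(2024, 6, 5, tzinfo=utc)))  # True

# Inventory monitoring: the age of the data matters
print(freshness(last_sale_loaded, now) <= timedelta(hours=1))  # False
```

The same load is acceptable for the monthly report but stale for inventory monitoring, which is why requirements for each dimension should be set per use case.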

Timeliness and freshness are both important aspects of data quality, but they may have different implications and trade-offs depending on the context and purpose of data usage. Data users should define their requirements and expectations for these dimensions and communicate them clearly to data providers and stewards.

Validity

This dimension measures how well the data conforms to the business rules, calculations, or expectations.

It can be assessed by checking data types, ranges, formats, or patterns. The business glossary is the tool where business rules, ranges, and patterns should be defined.
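Rules of this kind can be expressed as simple predicates over each field. In the sketch below the rules are hard-coded for illustration; in practice they would be derived from the definitions in the business glossary. The field names, the deliberately loose e-mail pattern, and the age range are assumptions.

```python
import re

# Hypothetical rules: one predicate per field, covering type, range, and pattern
RULES = {
    # Format: a loose e-mail pattern (something@domain.tld)
    "email": lambda v: isinstance(v, str) and re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None,
    # Type and range: an integer age between 0 and 120
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,
    # Pattern: a two-letter uppercase country code
    "country": lambda v: isinstance(v, str) and re.fullmatch(r"[A-Z]{2}", v) is not None,
}


def invalid_fields(record, rules=RULES):
    """Return the fields of `record` that violate their rule (missing fields count as invalid)."""
    return [field for field, check in rules.items() if not check(record.get(field))]


print(invalid_fields({"email": "anna@example.com", "age": 34, "country": "IT"}))  # []
print(invalid_fields({"email": "not-an-email", "age": 150, "country": "Italy"}))  # ['email', 'age', 'country']
```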

These dimensions are widely accepted, but they are not comprehensive. Other dimensions may apply to specific contexts from various perspectives, such as currency, conformity, and integrity. They may refer to governance aspects as well as infrastructural ones. The selection of dimensions and their acceptance criteria depends on the requirements and goals of data usage.

Process

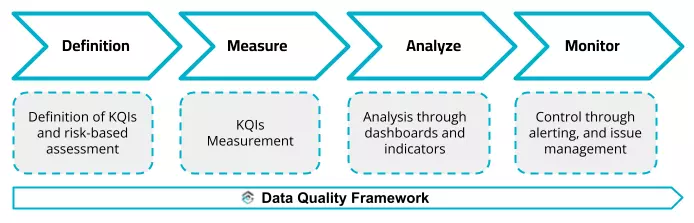

Let’s see how the data quality dimensions can be used in a data quality management process. A typical data quality management strategy can be described by the following steps:

- Identification of the data that needs to be checked, typically those critical for operations and reporting.

- Identification of the data quality dimensions and their acceptance thresholds.

- Measurement of each of the identified dimensions.

- Review of the collected results.

- Application of corrective measures.

- Monitoring of trends and repetition of the process with possible extension to other data of interest.
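One iteration of this cycle (measure each dimension, compare it with its acceptance threshold, collect failures for review, keep history for trend monitoring) can be sketched in Python. The `Check` structure, the measures, and the thresholds are hypothetical and only illustrate the flow; they do not reflect how Blindata stores KQIs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Check:
    dataset: str
    dimension: str
    measure: Callable[[list], float]  # returns a value between 0 and 1
    threshold: float                  # minimum acceptable value


def run_cycle(checks, datasets, history):
    """Measure every check, append results to `history`, return the checks below threshold."""
    failing = []
    for check in checks:
        value = check.measure(datasets[check.dataset])
        # Keep every measurement so trends can be monitored across cycles
        history.setdefault((check.dataset, check.dimension), []).append(value)
        if value < check.threshold:
            failing.append((check.dataset, check.dimension, value))
    return failing  # candidates for review and corrective measures


datasets = {"orders": [{"id": 1, "customer": "A"}, {"id": 2, "customer": None}, {"id": 2, "customer": "B"}]}
checks = [
    Check("orders", "completeness", lambda rows: sum(r["customer"] is not None for r in rows) / len(rows), 0.9),
    Check("orders", "uniqueness", lambda rows: len({r["id"] for r in rows}) / len(rows), 0.5),
]
history = {}
print(run_cycle(checks, datasets, history))
```

Running the cycle again later appends new values to `history`, which is what makes trend monitoring possible.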

The effectiveness of the process therefore depends closely on how well the data quality rules fit the requirements of the specific business activities. As we will see shortly, Blindata supports the implementation of this type of framework.

KQIs

Definition

The Blindata Data Quality module provides a framework for a data quality management process like the one just illustrated, based on defining Key Quality Indicators (KQIs), measuring them, and actively monitoring them. The module is conceptually divided into two sections. The first is functional, and its reference user is the Data Quality Analyst, who identifies which Key Quality Indicators must be defined, taking the needs of the business into account. The second is technical, and its reference user is the Data Quality Technician, who knows the organization's systems, processes, and data flows and can therefore query them to implement the KQIs defined by the analysts. The Blindata Data Quality module is designed to integrate with the Business Glossary and Data Catalog modules so that the collected KQIs and their results can be shared across the organization.

In Blindata, the measurement of KQIs is based on the calculation of a metric that represents the value of a certain quality dimension for certain data. Some examples are:

- The number of events collected in an hour may represent the freshness of the data.

- The number of elements with a certain field not filled in may identify how complete the collected data are.

- A calculated field whose value does not reflect the values of the fields it is derived from may indicate how consistent the data are.

- The count of the rows of two distinct datasets across two different systems may represent an indicator of consistency and infrastructural alignment.
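As an illustration of how a Data Quality Technician might extract such metrics, the sketch below runs one SQL query per metric against an in-memory SQLite database. The tables, columns, sample rows, and fixed reference time are hypothetical; in a real setup each query would run against the system that holds the data, and the two row counts would come from two different systems rather than one database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE events (id INTEGER PRIMARY KEY, ts TEXT);
INSERT INTO events VALUES (1, '2024-06-03 06:00:00'), (2, '2024-06-03 08:10:00'), (3, '2024-06-03 08:45:00');

CREATE TABLE orders (id INTEGER PRIMARY KEY, description TEXT,
                     quantity INTEGER, unit_price REAL, total REAL);
INSERT INTO orders VALUES
  (1, 'Red mug', 2, 5.0, 10.0),
  (2, NULL, 1, 7.5, 7.5),
  (3, 'Blue mug', 3, 5.0, 12.0);

-- Stand-in for the same dataset copied to another system
CREATE TABLE orders_replica (id INTEGER PRIMARY KEY);
INSERT INTO orders_replica VALUES (1), (2);
""")

NOW = "2024-06-03 09:00:00"  # fixed reference time so the example is reproducible

# Each metric is a query returning a single number, plus its parameters
METRICS = {
    # Freshness: events collected in the last hour
    "events_last_hour": ("SELECT COUNT(*) FROM events WHERE ts > datetime(?, '-1 hour')", (NOW,)),
    # Completeness: orders without a description
    "missing_description": ("SELECT COUNT(*) FROM orders WHERE description IS NULL", ()),
    # Consistency: totals that do not match quantity * unit_price
    "total_mismatch": ("SELECT COUNT(*) FROM orders WHERE ABS(total - quantity * unit_price) > 0.005", ()),
    # Alignment: row counts of the two copies
    "rows_source": ("SELECT COUNT(*) FROM orders", ()),
    "rows_replica": ("SELECT COUNT(*) FROM orders_replica", ()),
}

results = {name: conn.execute(sql, params).fetchone()[0] for name, (sql, params) in METRICS.items()}
print(results)
```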

Starting from the extracted metric, the Blindata framework calculates two synthetic indicators. The first is the score, a value from 0 to 100 that expresses how the KQI value is positioned relative to an expected value; in other words, the score measures how good a KQI is. Based on the score and on thresholds defined by the user, each KQI is then assigned a second synthetic indicator in the form of a traffic light.
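The text does not specify how Blindata maps a metric to a score, so the sketch below assumes a simple linear scale: 100 when the metric equals the expected value, 0 when it reaches a user-defined worst value, clamped in between. The traffic light then compares the score with two hypothetical thresholds, `warning` and `success`. Treat both as an illustration of the idea, not as the product's formula.

```python
def score(value, expected, worst):
    """Linear score: 100 at the expected value, 0 at (or beyond) the worst value."""
    if expected == worst:
        raise ValueError("expected and worst must differ")
    raw = (value - worst) / (expected - worst) * 100
    # Clamp so values better than expected or worse than worst stay in [0, 100]
    return max(0.0, min(100.0, raw))


def traffic_light(s, warning=60, success=90):
    """Map a score to a traffic-light colour using two user-defined thresholds."""
    if s >= success:
        return "green"
    if s >= warning:
        return "yellow"
    return "red"


# Metric: number of items with a missing description (expected 0, unacceptable at 50)
for missing in (0, 10, 60):
    s = score(missing, expected=0, worst=50)
    print(missing, round(s, 1), traffic_light(s))
```

Because the scale is defined by `expected` and `worst`, the same functions work for metrics where higher is better, such as events collected per hour, simply by choosing `expected` greater than `worst`.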

Metrics

Gathering

The Blindata Data Quality module has been designed to be flexible and to support a wide range of integration scenarios. Depending on the nature of the checks and on the maturity and digitalization of the organization, metrics can be collected in different ways:

- for example, in the case of complex checks that may need to be performed only sporadically, such as those related to regulatory compliance, metrics can be collected manually through the Blindata interface

- if there are already data processing flows and processes in the company that extract quality metrics or if there are already data quality checks, automated or not, it is possible to export the results to Blindata via API

- if the data quality initiatives are starting from scratch, the automation of metric collection can be managed completely by the platform with the help of quality probes.